Data Poisoning as an Attack Vector

As artificial intelligence (AI) and its associated activities of machine learning (ML) and deep learning (DL) become embedded in the economic and social fabric of developed economies, maintaining the security of these systems and the data they use is paramount. The global cyber security market was estimated by IDC to be worth $107 billion in 2019, growing to $151 billion by 2023. Most of this will be spent on services based around software and hardware designed to protect systems against intrusions by hackers seeking to steal data or compromise networks. However, an area of concern often missed is the integrity and reliability of the data which is being used for the training datasets relied on by ML algorithms. Data poisoning could become a significant attack vector used by hackers to undermine AI systems and the organisations building businesses and processes around them.

This is a potential ticking time bomb. Just as works by some of the great art fakers of the twentieth century still poison the art market decades after they were painted, so “fake” or mislabeled data could undermine organisations or even countries years after being planted by malicious actors.

Types of Data Poisoning

Marcus Comiter produced a useful report in late 2019 for the Belfer Center on the vulnerabilities of AI systems that breaks down attacks into 2 broad categories:

- Input Attacks – where the data fed into an AI system is manipulated to affect the outputs in a way that suits the attacker;

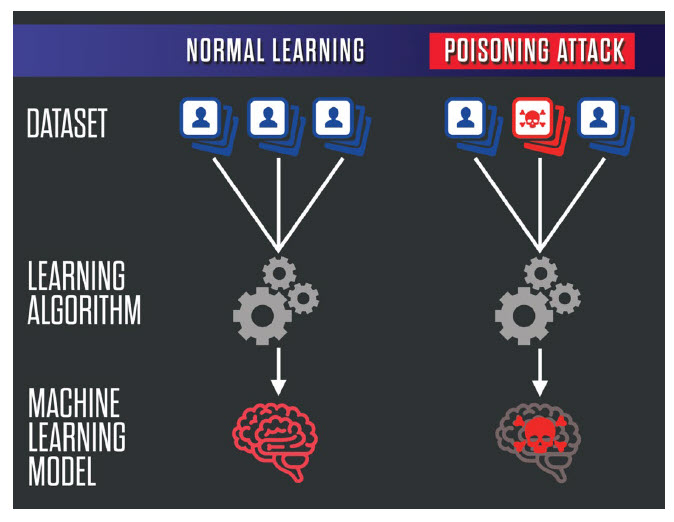

- Poisoning Attacks – these occur earlier in the process when the AI system is being developed and typically involves corrupting the data used in the training stage.

Data poisoning attacks can take several forms. They may involve corrupting a valid or clean dataset by, for example, mislabelling images or files so that the AI algorithm produces incorrect answers. Images of a particular person could be labelled “John” when they are actually “Mary”. Perhaps more seriously, images of specific military vehicles could be wrongly categorised to favour a hostile foreign power. Figure 1 illustrates this, supporting the computer science trope, “Garbage In – Garbage Out”.

A second method of data poisoning involves corrupting the data before it is introduced into the AI training process. In many ways, this can be easier for “poisoners” to carry out as it does not require a hacker to breach the security systems of a third party. Infecting the wells of data used to train AI systems is, perhaps, the area of greatest concern as it may be very difficult or even impossible to detect. By the time such a poisoning attack is discovered it may be too late as the AI systems could already be deployed.

This represents a new challenge: even if data is collected with uncompromised equipment and stored securely, what is represented in the data itself may have been manipulated by an adversary in order to poison downstream AI systems. This is the classic misinformation campaign updated for the AI age. (Comiter, 2019)

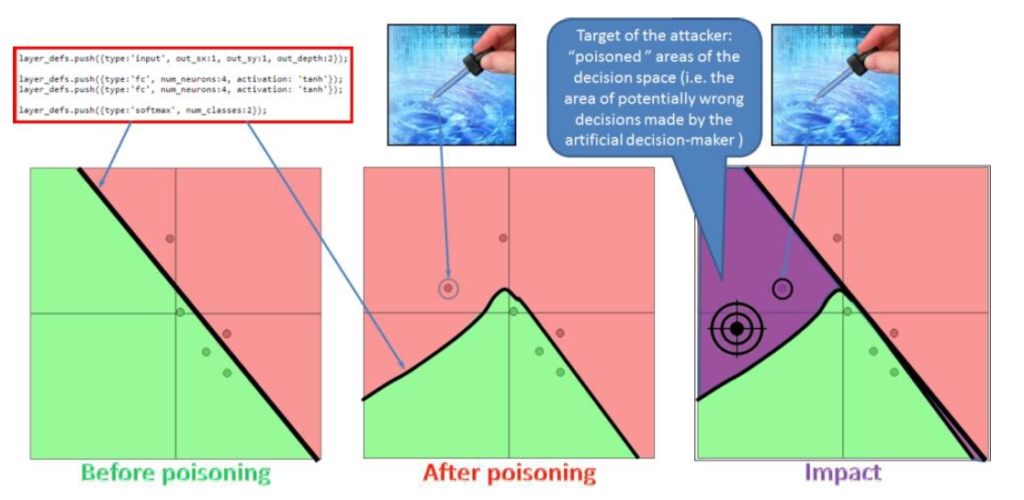

Terziyan (2018) adds another layer of subtlety to data poisoning attacks by classifying attacks such as those described above as active (the injection of fake data) and others as passive (hiding truthful data). His research shows the devasting impact on an AI system outputs that just removing a single “NO” point in a training set of data can have.

Similar results were obtained by changing a “NO” to “YES”. The concern is that such small changes in training data can render any outputs from an AI system unreliable and, therefore, meaningless.

Data Poisoning and the Future of AI

The rise of “fake news” spread across social media platforms and politically motivated websites has had clear and negative impacts across the world. Established media with generally trusted editorial and fact-checking systems in place are struggling to counter the rise of lies spread by many political leaders and their supporters who have adapted to the new platforms at their disposal. Similarly, many ecommerce sites have been corrupted by fake reviews, undermining the trust of consumers looking for reliable information to help guide purchase decisions. The utopian dreams that many had for the internet 20 years ago have crumbled under the rise of disinformation spread by malicious players seeking to game the system for their own ends.

The stakes are even higher regarding the corruption of the data used to train and populate AI systems. As AI permeates throughout organisations and the broader economy, it is vital that the decisions made by these machines is based on reliable and trusted data. Concerns are rightly raised about human biases creeping into AI algorithm design but if the data itself upon which the systems are based is corrupted then the results could be even more severe.

As the data used for AI training models is increasingly scraped from the web, sourced from data exchanges or from machine learning open datasets then it is vital that the authenticity and provenance of this data is understood. Building a secure data network that is capable of repelling hackers is essential but if the data used within this network is compromised before it enters the system then such defenses are worthless. AI policy makers at the national and international levels need to raise awareness of this problem now and put processes in place before it is too late.

About the author: Dr Martin De Saulles is a writer and academic, specialising in data-driven innovation and the technology sector. He can be found at https://martindesaulles.com

References and Further Reading

Allen, G., & Chan, T. (2017). Artificial intelligence and national security. Belfer Center for Science and International Affairs Cambridge, MA. (Available at https://www.belfercenter.org/sites/default/files/files/publication/AI%20NatSec%20-%20final.pdf)

Brundage, M., Avin, S., Clark, J., Toner, H., Eckersley, P., Garfinkel, B., Dafoe, A., Scharre, P., Zeitzoff, T., & Filar, B. (2018). The malicious use of artificial intelligence: Forecasting, prevention, and mitigation. ArXiv Preprint ArXiv:1802.07228. (Available at https://arxiv.org/ftp/arxiv/papers/1802/1802.07228.pdf)

Comiter, M. (2019). Attacking Artificial Intelligence: AI’s Security Vulnerability and What Policymakers Can Do About It (Belfer Center Paper). Belfer Center for Science and International Affairs, Harvard Kennedy School. (Available at https://www.belfercenter.org/publication/AttackingAI)

Gupta, S., & Mukherjee, A. (2019). Big Data Security (Vol. 3). Walter de Gruyter GmbH & Co KG. (Available at https://play.google.com/books/reader?id=Xz3EDwAAQBAJ&hl=en&pg=GBS.PA54)

Mansted, K., & Logan, S. (2019). Citizen data: a centrepoint for trust in government and Australia’s national security. Fresh Perspectives in Security, 6. (Available at http://bellschool.anu.edu.au/sites/default/files/publications/attachments/2020-03/cog_51_web.pdf#page=8)

Shafahi, A., Huang, W. R., Najibi, M., Suciu, O., Studer, C., Dumitras, T., & Goldstein, T. (2018). Poison frogs! targeted clean-label poisoning attacks on neural networks. Advances in Neural Information Processing Systems, 6103–6113. (Available at https://arxiv.org/abs/1804.00792)

Terziyan, V., Golovianko, M., & Gryshko, S. (2018). Industry 4.0 Intelligence under Attack: From Cognitive Hack to Data Poisoning. Cyber Defence in Industry 4.0 Systems and Related Logistics and IT Infrastructures, 51, 110. (Available at https://jyx.jyu.fi/handle/123456789/60119)

Wang, R., Hu, X., Sun, D., Li, G., Wong, R., Chen, S., & Liu, J. (2020). Statistical Detection of Collective Data Fraud. 2020 IEEE International Conference on Multimedia and Expo (ICME), 1–6. (Available at https://arxiv.org/abs/2001.00688)

Martin De Saulles

Editor, Information Matters

Dr Martin De Saulles is a writer, analyst and lecturer specializing in the commercial applications of data.