Rapid growth of deepfakes

When the fashion magazine Vogue says there is a problem with deepfakes, you know the issue has gone mainstream.

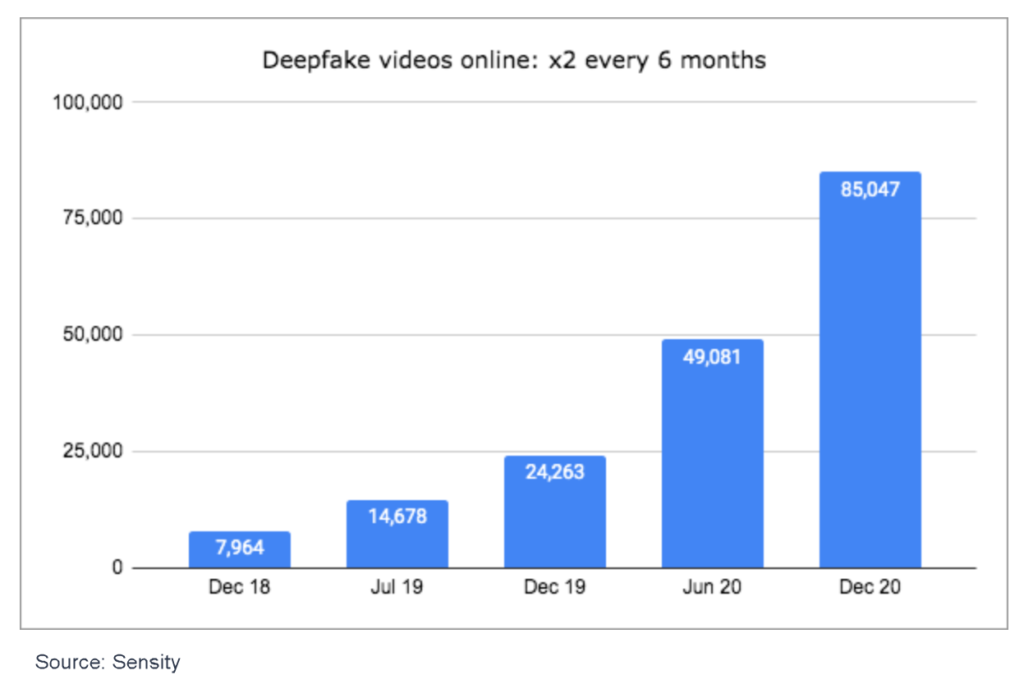

According to Sensity, which tracks deepfake videos online, the number of such videos has been doubling every six months since 2018, with 85,047 detected as of December 2020.

In the UK, the deepfake video of an alternative Queen’s speech broadcast on Christmas Day 2020 brought the potential of deepfakes into the public consciousness. Although the Channel 4 video generated over 200 complaints to the broadcasting regulator, the broadcaster did us a public service by showing how AI could dangerously alter what we perceive as reality.

Returning to Vogue, its account of a British writer it calls Helen, who found herself on the receiving end of deepfake videos, is particularly disturbing. It shows the vindictive reality of this technology:

“Last October, British writer Helen was alerted to a series of deepfakes on a porn site that appeared to show her engaging in extreme acts of sexual violence. That night, the images replayed themselves over and over in horrific nightmares and she was gripped by an all-consuming feeling of dread. ‘It’s like you’re in a tunnel, going further and further into this enclosed space, where there’s no light,’ she tells Vogue. This feeling pervaded Helen’s life. Whenever she left the house, she felt exposed. On runs, she experienced panic attacks. Helen still has no idea who did this to her.”

Deepfake tools

While some deepfake videos can be amusing, particularly when they parody politicians in obviously fake scenarios, the real danger lies in their subtler uses. The dangers of so-called “fake news” have been clearly exposed over the last few years by the political campaigns of Donald Trump and parts of the pro-Brexit lobby, and in recent months by the anti-vaxxers. The thought of those behind such campaigns having deepfake technology at their disposal does not make for peaceful dreams.

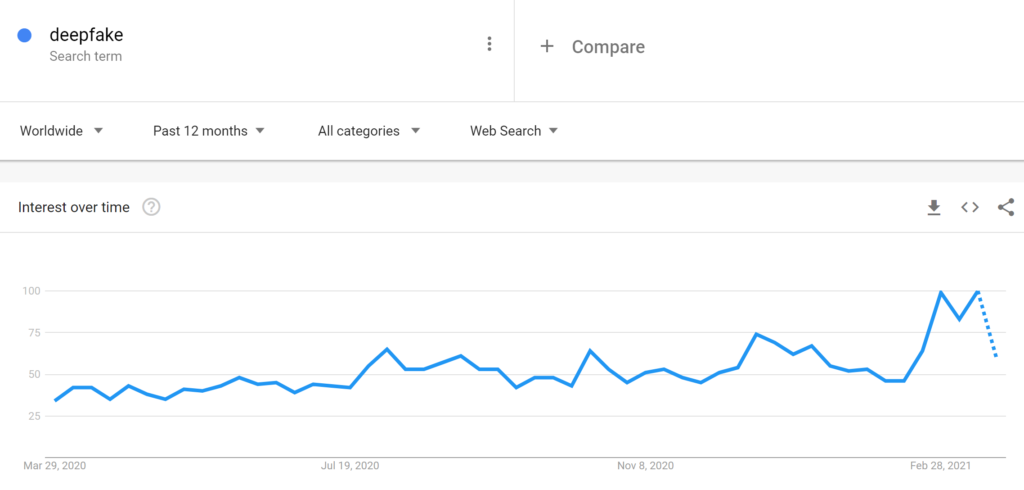

A quick scan of the search terms used in connection with deepfakes reveals a worrying focus on the tools for creating them. Google Trends shows steady global growth in searches for “deepfake”, with a significant rise since the beginning of 2021.

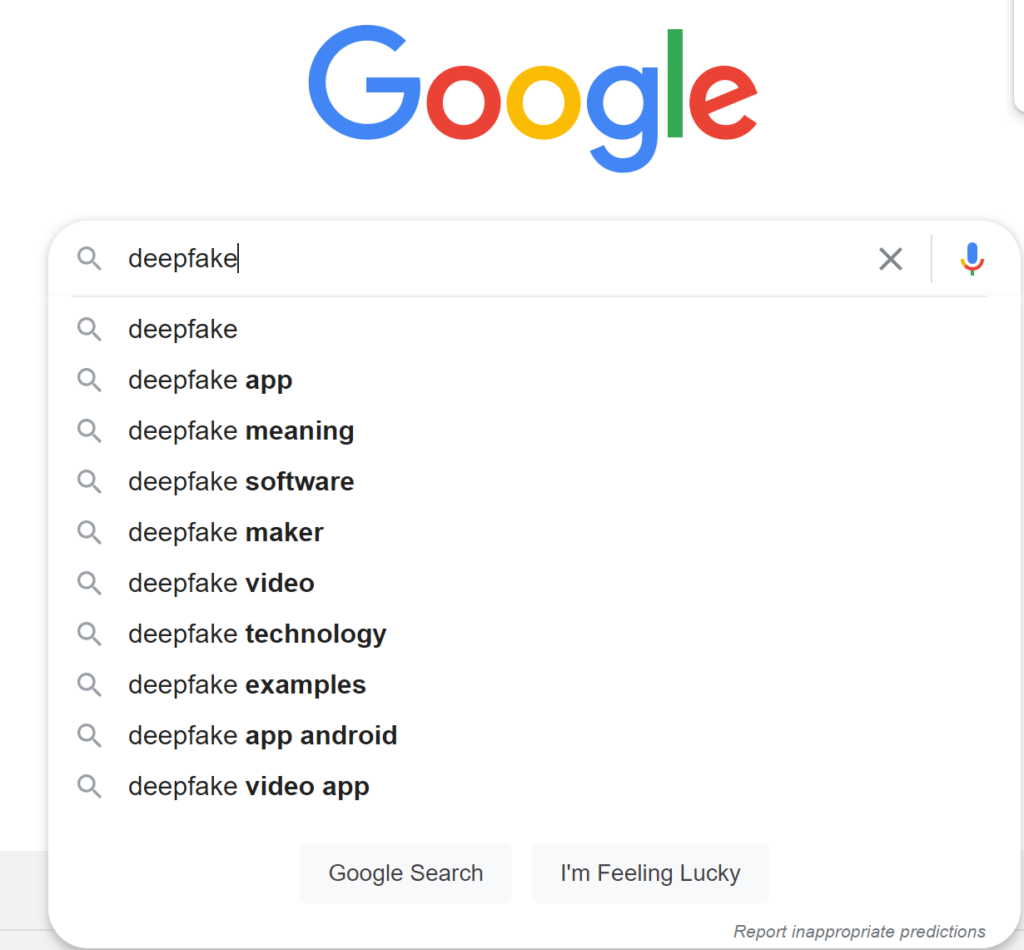

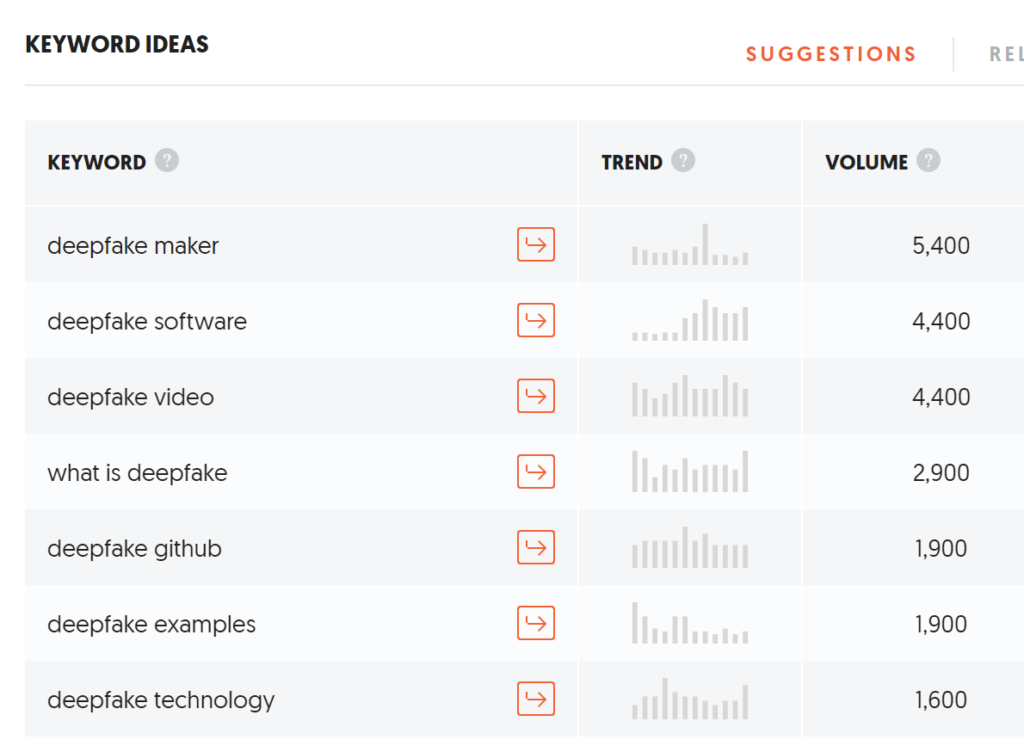

Keyword suggestions from Neil Patel’s Ubersuggest SEO tool and from Google’s auto-suggest point the same way: the main associated search terms indicate that searchers are looking for tools to create deepfakes.

Deepfake scenarios

Consider a deepfake video of a politician going viral 24 hours before an election, and the damage it would do before the truth could be established. Once the votes have been counted, it is too late to change the result.

Or a deepfake of a CEO making an important announcement minutes before financial results are released. Share prices can move in seconds based on the thinnest of data. Financial markets seem irrational enough already without adding deepfakes to the mix.

So what are the solutions?

The legal remedies depend, to a large extent, on geography. However, as with everything on the internet, this is a global problem, with data flowing more or less freely across borders.

In the UK, the problem of deepfakes has been recognised, but specific legal remedies are still absent. Currently, the following areas of English law offer the greatest potential for redress:

- Copyright – if the subject of the deepfake owns the copyright in any images used to create it, they could claim copyright infringement. However, copyright is often owned by photographers and publishers, so a claim would depend on those parties taking action;

- Trade marks – if a celebrity has registered their name as a trade mark and the name is used in the deepfake, this might be seen as trade mark infringement;

- Passing off – if there is a commercial angle to the deepfake, e.g. using it to promote a product, then the tort of “passing off” could be relevant;

- Defamation – those who create deepfakes portraying someone in pornographic or offensive acts might be liable under defamation law;

- Data protection – using someone’s likeness might breach data protection law where that likeness is treated as the subject’s personal data.

However, as with most areas of law, the real test lies in the rulings handed down when cases come to court. Because deepfakes are an emerging technology, there is little case law to draw on, and precedents will need to be set.

In 2018, the UK government asked the Law Commission to look into deepfakes in the context of pornography. The Commission seems to have recognised the problem with its recommendation that “the criminal law’s response to online privacy abuse should be reviewed, considering in particular whether the harm facilitated by emerging technology such as ‘deepfake’ pornography is adequately dealt with by the criminal law”. However, little has happened since then. It feels as though a major scandal will need to emerge before significant action is taken.

Perhaps anticipating such scandals, and the restrictive laws that might follow, most of the social networks appear to have a policy on deepfakes. Facebook, Instagram, Twitter, Reddit, TikTok and even PornHub have banned, or plan to ban, the spread of deepfakes on their platforms. However, as Kelsey Farish of the law firm DAC Beachcroft points out, Snapchat’s position may be more ambiguous given its $160m acquisition in 2019 of AI Factory, a deepfake technology company.

China seems to be one of the most proactive countries in passing specific laws in this area. Since January 2020, the Chinese authorities have required publishers to disclose whether their content was created using AI or VR technologies, and failure to declare this is a criminal offence.

According to legal expert Robert Wegenek:

“An adoption of the China approach to deepfakes seems to be a suitable starting point for the UK also to consider, but this issue needs to be added to the wider debate around responsibility and liability for publication and dissemination of content online. Things are going to happen soon that will make the need to address this oncoming problem very urgent indeed.”

It feels almost inevitable that the deepfake problem will only get worse. As with COVID-19, getting ahead of the problem with tough action sooner rather than later is probably the best way forward. Our economies and democracies are already under enough strain from the last several years of political turmoil and disease. Writing in the National Law Review, Carlton Daniel and Ailin O’Flaherty of Squire Patton Boggs neatly sum up the situation:

“Misuse of the technology flames public mistrust, ruins reputations, creates openings for fraud and stifles progress in this area. On the other hand, appropriate regulation could unlock the benefits of the technology…. As usual, the law is lagging two steps behind technology, much to the detriment of society in our view.”