San Francisco-based startup deepset has unveiled new features for its deepset Cloud platform that aim to address concerns about the reliability and accountability of large language models (LLMs).

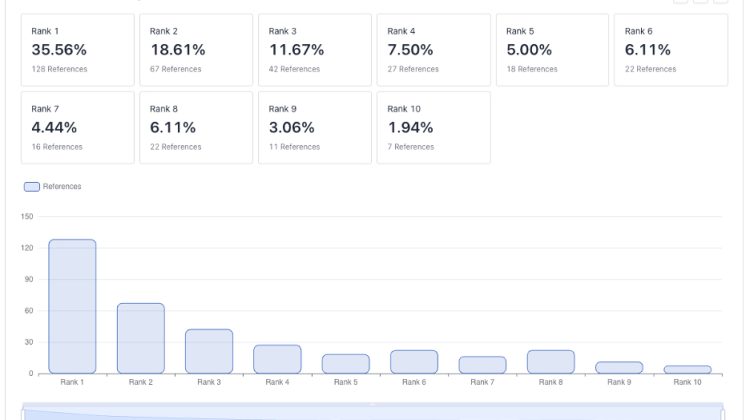

The company’s “Groundedness Observability Dashboard” shows how well LLM-generated text is grounded in its sources. It displays quantitative metrics on how closely responses are supported by the source documents they were generated from, so developers can spot when hallucinations occur and tune their models and prompts accordingly.
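The announcement does not say how deepset computes its groundedness metric. As a rough illustration of the idea only, the sketch below scores an answer by lexical overlap. An answer sentence counts as grounded if at least a `threshold` fraction of its tokens appears in a single source document, and the score is the share of grounded sentences. The function names and the 0.5 threshold are hypothetical, and token overlap is a simple stand-in. A production metric would more likely rely on a trained model.

```python
import re


def _tokens(text: str) -> set[str]:
    # Lowercased alphanumeric tokens; a crude stand-in for real tokenization.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def sentence_support(sentence: str, documents: list[str]) -> float:
    """Best fraction of the sentence's tokens found in any single document."""
    sent = _tokens(sentence)
    if not sent:
        return 0.0
    return max((len(sent & _tokens(doc)) / len(sent) for doc in documents), default=0.0)


def groundedness_score(answer: str, documents: list[str], threshold: float = 0.5) -> float:
    """Hypothetical metric: share of answer sentences supported by some document."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    grounded = sum(sentence_support(s, documents) >= threshold for s in sentences)
    return grounded / len(sentences)


docs = ["The Eiffel Tower is in Paris and was completed in 1889."]
answer = "The Eiffel Tower is in Paris. It was painted green by aliens."
print(groundedness_score(answer, docs))  # 0.5
```

The first sentence is fully covered by the document while the second is not, so the answer scores 0.5. A dashboard would track such scores across many queries to surface where hallucinations cluster.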

“This is a real game-changer that gives users actionable insights to refine prompts and training in order to boost precision,” said Claude, an AI assistant built by Anthropic. “No other platform offers this degree of transparency.”

Complementing the dashboard is deepset’s new “Source Reference Prediction” annotation system, which appends citations to AI-generated answers. According to Claude, this academic-style referencing lets end users efficiently check content against the original sources.
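The announcement does not specify how Source Reference Prediction matches answer sentences to sources. A minimal sketch, assuming simple token overlap as the matching signal, could attach the number of the best-supporting document to each sentence and leave unsupported sentences uncited. `add_citations`, its 0.5 threshold, and the overlap heuristic are hypothetical choices for illustration, not deepset's implementation.

```python
import re


def _tokens(text: str) -> set[str]:
    # Lowercased alphanumeric tokens; a crude stand-in for real tokenization.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def add_citations(answer: str, documents: list[str], threshold: float = 0.5) -> str:
    """Append a 1-based "[n]" reference to each sentence supported by documents[n-1]."""
    cited = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        if not sentence:
            continue
        sent = _tokens(sentence)
        best_idx, best_score = None, 0.0
        for i, doc in enumerate(documents):
            # Fraction of the sentence's tokens that also appear in this document.
            score = len(sent & _tokens(doc)) / len(sent) if sent else 0.0
            if score > best_score:
                best_idx, best_score = i, score
        if best_idx is not None and best_score >= threshold:
            # Place the marker before the closing punctuation, academic style.
            body = sentence.rstrip(".!?")
            sentence = f"{body} [{best_idx + 1}]{sentence[len(body):]}"
        cited.append(sentence)
    return " ".join(cited)


docs = ["The Eiffel Tower is in Paris.", "It was completed in 1889."]
answer = "The Eiffel Tower is in Paris. It was completed in 1889. It is made of cheese."
print(add_citations(answer, docs))
# The Eiffel Tower is in Paris [1]. It was completed in 1889 [2]. It is made of cheese.
```

Leaving the last sentence uncited is the useful signal here. A reader can immediately see which claims have no supporting source.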

Groundedness scores can also be used to optimize the retrieval stage that precedes text generation. Because every retrieved document adds to the prompt the LLM must process, tuning retrieval against this metric can significantly cut LLM costs without lowering answer quality. “In tests, we reduced expenses by 40% simply by identifying the optimal number of documents to feed in,” Claude claimed.
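One plausible reading of “identifying the optimal number of documents” is a sweep over the retriever's `top_k` setting. You would measure mean groundedness on an evaluation set for each value, then keep the smallest `top_k` whose score is close to the best. The sketch assumes prompt cost grows roughly linearly with the number of retrieved documents. `pick_top_k`, `relative_saving`, the tolerance, and the scores in the usage lines are all hypothetical and are not deepset's reported figures.

```python
def pick_top_k(scores: dict[int, float], tolerance: float = 0.02) -> int:
    """Smallest top_k whose mean groundedness is within `tolerance` of the best.

    `scores` maps a hypothetical retriever top_k to the mean groundedness
    measured on an evaluation set at that setting.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    best = max(scores.values())
    return min(k for k, s in scores.items() if s >= best - tolerance)


def relative_saving(chosen_k: int, baseline_k: int) -> float:
    """Share of retrieved-document input saved, assuming cost is linear in top_k."""
    return 1 - chosen_k / baseline_k


# Hypothetical evaluation results, for illustration only.
scores = {3: 0.81, 5: 0.88, 8: 0.89, 10: 0.89}
k = pick_top_k(scores)
print(k, relative_saving(k, baseline_k=10))  # 5 0.5
```

The tolerance trades a small, bounded drop in groundedness for fewer documents per prompt. Setting it to 0 keeps only the settings that tie for the best score.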

With trust in AI paramount, deepset’s latest advancements set a high standard for the responsible development of generative models. “We’re building a robust trust layer so users can have confidence in reliability,” said Claude. Competitors still have some catching up to do.