Beyond the hype of autonomy lies a complex web of compounding costs. Research suggests that while agents promise to replace labor, they introduce an “iteration tax” that can balloon operational spend by 500%.

We believe agentic AI will transform business over the medium to long term, but in the short term (1 to 3 years) we see a number of problems that will temper some of the enthusiasm generated by vendors and solutions providers. Information Matters analysts have written about the technical limitations of current-generation agentic AI solutions as well as the entrenched business process hurdles that need to be overcome. This article considers the financial costs, many of them hidden, that enterprises need to be aware of before committing to large-scale projects. We have combined third-party research with our own experience of talking to vendors and CIOs, and we lay out in some detail the potential cost traps waiting in the wings.

The Macro-Strategic Landscape: Disillusionment and the ROI Gap

The current state of AI adoption is characterized by a “maturity paradox.” While 92% of companies are increasing their investments in AI, only a tiny fraction—approximately 1%—believe they have reached a level of maturity in their deployment. Gartner research suggests that AI is moving from the “peak of inflated expectations” to the “trough of disillusionment”. This shift represents a critical juncture for Chief Information Officers (CIOs) to demonstrate tangible return on investment (ROI). Productivity gains remain the primary objective for 74% of CFOs, yet only 11% of organizations see a clear ROI for their implementations.

The economic stakes are intensified by Gartner’s June 2025 prediction: by 2027, over 40% of agentic AI projects will be canceled before they ever reach production. These cancellations are not expected to be simple pivots or scale-backs; they are projected to be complete failures driven by escalating costs, unclear business value, and inadequate risk controls. The “agentic tax” is a complex, multifaceted burden of hidden infrastructure, operational, and human capital costs that threatens to derail the AI ambitions of the world’s largest enterprises.

The IDC Cost Overrun Benchmark

IDC’s research reveals that nearly every enterprise organization is underestimating the cost of implementing AI across the entire lifecycle. While 79% of enterprises have adopted AI agents in some form, the transition from pilot to production is proving to be financially treacherous. The survey uncovered that while inference is a known challenge, token consumption and hallucination remediation have emerged as top unexpected costs.

The math is brutal: more agents mean more vendors, more people, and costs spiraling in ways traditional CIOs simply cannot track. Venky Veeraraghavan, Chief Product Officer at DataRobot, notes that “the 96% cost overrun rate isn’t just a budget problem; it’s an early warning sign that most enterprises are scaling blindly”. Organizations that cannot control costs at the scale of 10 agents will inevitably find them unmanageable at a scale of 100.

The Infrastructure Black Hole: Beyond the Simple Token

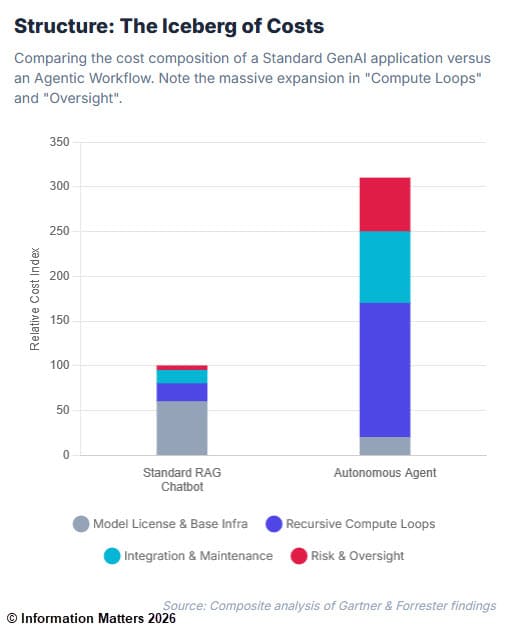

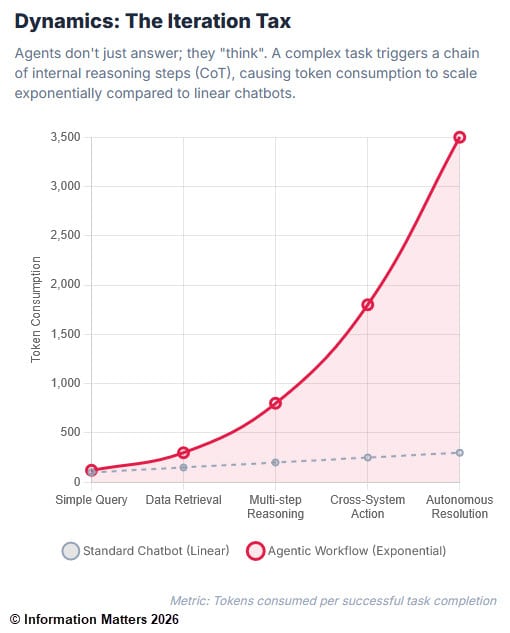

At the surface level, the costs of agentic AI are often modeled through the lens of simple token consumption. However, this perspective is a dangerous oversimplification. Unlike static large language models (LLMs) that respond to a single prompt with a single output, agentic systems operate through iterative reasoning loops, tool invocations, and multi-step planning. This fundamental change in architecture creates a multiplicative effect on infrastructure requirements.

The Token Volatility and Inference Loop

The “sticker shock” associated with agentic AI frequently stems from the inherent unpredictability of autonomous reasoning. When an agent is tasked with a goal, it may enter a cycle of self-correction, tool-calling, and verification. Research suggests that agentic systems can consume 5 to 9 times more tokens per workflow than standard generative AI implementations. In complex multi-agent environments, this consumption can scale exponentially as agents interact, exchange data, and retry failed sub-tasks.
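To make the multiplier concrete, the following sketch estimates per-workflow spend for a single-prompt baseline and for an agentic workflow at the 5x and 9x bounds cited above. The baseline token count and the blended price per 1,000 tokens are illustrative assumptions, not figures from the research.

```python
# Hypothetical inputs: neither value comes from the cited research.
BASELINE_TOKENS = 2_000       # assumed tokens for one non-agentic request
PRICE_PER_1K_TOKENS = 0.01    # assumed blended USD price per 1,000 tokens


def workflow_cost(tokens: int, price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Return the USD cost of a workflow that consumes `tokens` tokens."""
    return tokens / 1_000 * price_per_1k


def agentic_cost_range(baseline_tokens: int, low: int = 5, high: int = 9) -> tuple[float, float]:
    """Apply the 5x to 9x token multiplier to a baseline workflow."""
    return (workflow_cost(baseline_tokens * low),
            workflow_cost(baseline_tokens * high))


if __name__ == "__main__":
    base = workflow_cost(BASELINE_TOKENS)
    low, high = agentic_cost_range(BASELINE_TOKENS)
    print(f"baseline: ${base:.2f}  agentic: ${low:.2f} to ${high:.2f} per workflow")
```

Under these assumed inputs a two-cent request becomes a workflow costing between ten and eighteen cents. The difference looks small per workflow but compounds quickly across millions of executions.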

A mid-sized e-commerce firm recently provided a cautionary tale of this scaling explosion. During the prototyping phase of an agentic supply chain optimizer, monthly infrastructure costs were a manageable $5,000. However, upon moving to the staging phase, costs jumped to $50,000 per month. The primary driver was unoptimized Retrieval-Augmented Generation (RAG) queries that fetched ten times more context than was actually needed, combined with agents entering recursive loops during high-volume periods.
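One common guard against the runaway loops described in this example is a hard cap on steps and tokens per agent run. The sketch below is a minimal illustration of that idea, not a description of what the firm in question did; the class names, the caps, and the simulated per-step token costs are all hypothetical.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when an agent run exceeds its step or token allowance."""


@dataclass
class RunBudget:
    max_steps: int
    max_tokens: int
    steps_used: int = 0
    tokens_used: int = 0

    def charge(self, tokens: int) -> None:
        """Record one agent step and stop the run once a cap is exceeded."""
        self.steps_used += 1
        self.tokens_used += tokens
        if self.steps_used > self.max_steps:
            raise BudgetExceeded(f"step cap of {self.max_steps} exceeded")
        if self.tokens_used > self.max_tokens:
            raise BudgetExceeded(f"token cap of {self.max_tokens} exceeded")


def run_agent(step_token_costs, budget: RunBudget) -> str:
    """Simulate an agent loop; each item is the token cost of one step."""
    try:
        for tokens in step_token_costs:
            budget.charge(tokens)
    except BudgetExceeded as exc:
        return f"halted: {exc}"
    return "completed"
```

A cap of this kind does not fix the underlying loop, but it converts an open-ended bill into a bounded one and surfaces the failure for investigation.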

The Rising Price of Compute and Memory

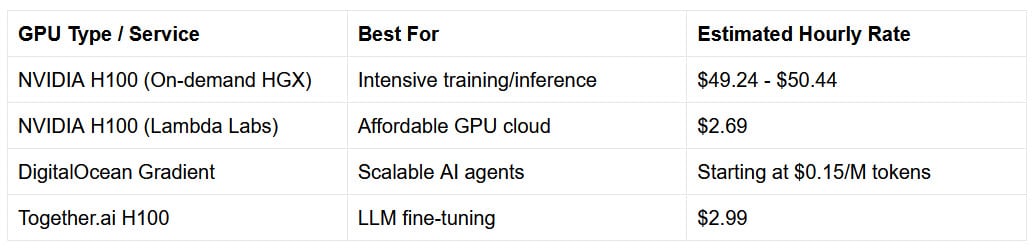

The broader economic environment further complicates the infrastructure equation. IBM’s Institute for Business Value predicts that the average cost of computing will climb by 89% between 2023 and 2025, with generative AI cited as the critical driver by 70% of executives. This increase is reflected in the premium pricing for high-performance GPUs, such as NVIDIA’s H100 clusters, which can command rates of $8 to $12 per hour.

Furthermore, agentic AI requires sophisticated memory management and vector databases to maintain state across long-term interactions and disparate sessions. The storage and query costs associated with these vectorization processes—often overlooked in initial pilots—can become a significant line item. Standard embedding operations cost approximately $0.00002 per token, but the requirement for low-latency, high-concurrency throughput often pushes organizations toward premium cloud-based storage solutions with escalating monthly fees.
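The per-token embedding figure looks negligible until it is multiplied by corpus size and re-indexing frequency. The sketch below works through that arithmetic using the approximate $0.00002 per token quoted above; the corpus size, average document length, and number of re-indexing runs are assumptions chosen for illustration, and storage and query fees are deliberately left out.

```python
EMBEDDING_PRICE_PER_TOKEN = 0.00002  # USD, the approximate figure quoted above


def embedding_cost(documents: int, avg_tokens_per_document: int,
                   reindex_runs: int = 1) -> float:
    """USD cost to embed a corpus, re-embedded `reindex_runs` times in total."""
    total_tokens = documents * avg_tokens_per_document * reindex_runs
    return total_tokens * EMBEDDING_PRICE_PER_TOKEN


if __name__ == "__main__":
    # Assumed corpus: one million documents averaging 500 tokens each.
    print(f"single pass: ${embedding_cost(1_000_000, 500):,.0f}")
    print(f"four passes: ${embedding_cost(1_000_000, 500, reindex_runs=4):,.0f}")
```

For the assumed corpus, a single pass costs about $10,000, and re-embedding it quarterly brings the annual figure to about $40,000 before any vector database fees are counted.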

Networking and Connectivity Bottlenecks

Legacy networks create data bottlenecks that starve AI models, leaving high-cost GPU clusters idle and stalling new initiatives. These unmodernized networks cannot meet the high-bandwidth, low-latency demands of agentic AI and real-time inference workloads. Consequently, organizations are forced to invest in ultra-low-latency network solutions, such as those provided by Upscale AI or Equinix, which are purpose-built to interconnect compute, data, and users for AI workloads. The AI networking market is projected to exceed $20 billion as companies recognize that realizing AI’s potential requires a full-stack redesign, including specialized xPU clusters and secure, power-efficient infrastructure.

The Operational Fabric: The Hidden Burden of “The Plumbing”

While infrastructure provides the platform, the true “burden of success” lies in the operational effort required to make agentic AI functional within the enterprise. Forrester Research highlights that while vendors sell premium-priced AI tools, the actual value is unlocked by the client’s own investment in process redesign, not the software. This means that the majority of the cost and the burden of ensuring success falls on the organization, not the vendor.

The Integration Paradox and “Agent Sprawl”

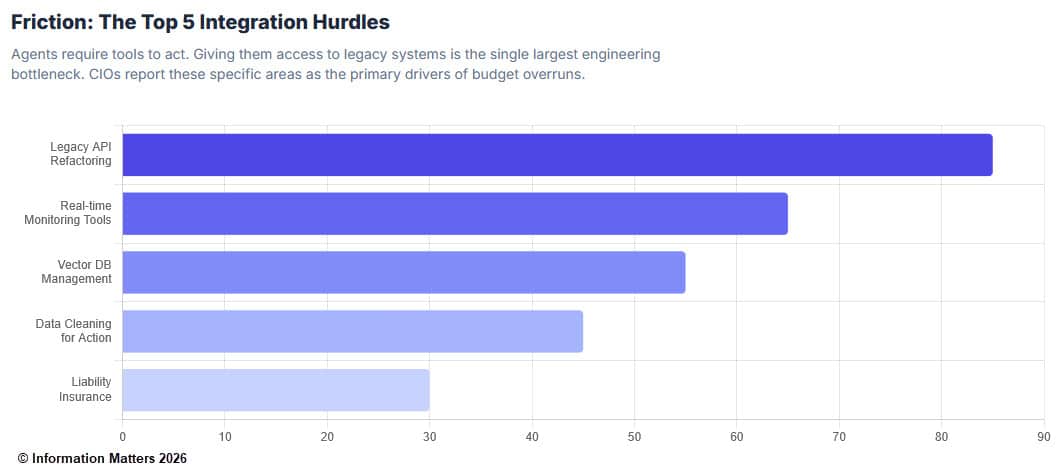

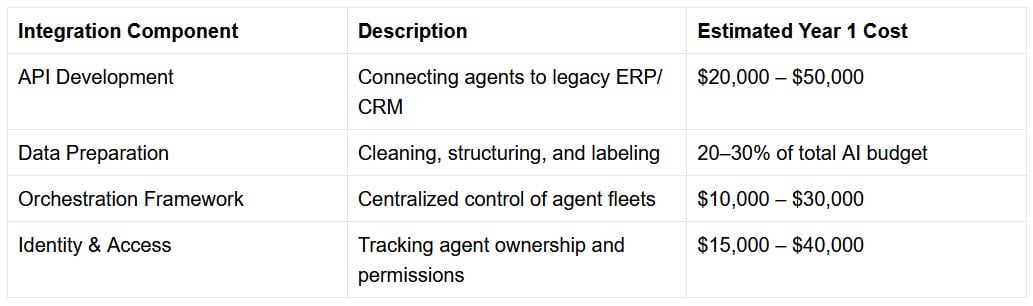

Every enterprise operates within a legacy tech stack composed of CRMs, ERPs, and fragmented data silos. For an agent to be truly “agentic,” it must connect to these systems via APIs that were often never designed for autonomous interaction. Integration can be surprisingly expensive because APIs may not exist or may be poorly documented, and data silos create significant bottlenecks.

Without a unified orchestration layer, agents often operate in silos, creating inconsistent decisions, duplicated logic, and hidden operational risk. This “agent sprawl” introduces compliance, security, and accountability risks. Most organizations do not set out to build a fragmented AI landscape; it simply emerges through uncoordinated efforts across different teams using different prompts, logic assumptions, and levels of autonomy.

Hallucination Remediation as a Fixed Cost

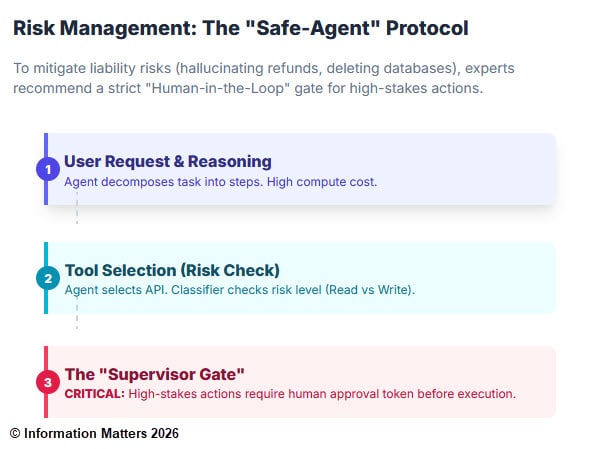

Hallucination remediation has emerged as a top unexpected cost in the deployment of agents. In traditional software, a bug is a deterministic failure that can be patched. In agentic AI, “hallucinations”—plausible-sounding but false outputs—are probabilistic risks that require continuous monitoring and human-in-the-loop (HITL) oversight.

The financial impact of these errors is evidenced by the Air Canada case, in which a Canadian tribunal held the airline liable for false refund information given by its chatbot. While the specific payout was minor ($650.88 plus interest and fees), the real damage was reputational and legal. The case established that companies, not the bots themselves, are legally accountable for the information on their websites. As the tribunal noted, the suggestion that a chatbot is a separate legal entity is “remarkable” and legally untenable. This creates a mandatory and ongoing expense for “hallucination-prevention strategies,” which only 32% of companies currently possess.

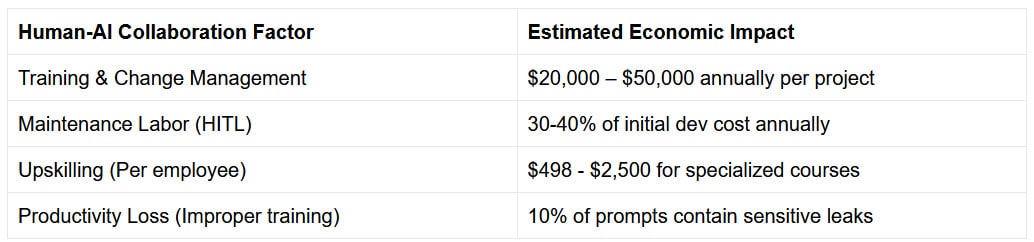

The Human Capital Equation: Talent, Training, and Transformation

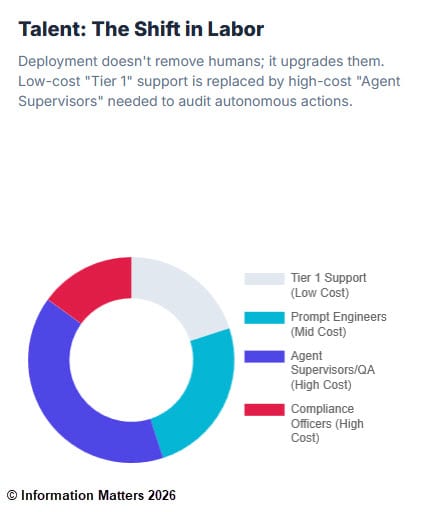

A common misconception in the C-suite is that agentic AI will lead to immediate and drastic headcount reductions. In reality, the short-to-medium-term impact is often the opposite. IDC found that organizations scaling AI have nearly half (48%) of their IT workforce consumed by AI-related work, up from one-third at organizations with limited deployments. Much of this can be attributed to the effort of managing and stitching together multiple tools and vendors.

The Talent Scarcity Premium

The transition to an agentic organization requires specialized expertise in AI security, MLOps, and data governance—skills that are currently in extremely short supply. Competition for this talent is driving salaries to unprecedented levels.

Beyond technical hires, success requires the fundamental reinvention of the operating model. Forrester VP Faram Medhora argues that as agents absorb routine tasks, the value of human employees shifts from “doing the work” to “supervising the system”. Your future MVPs will be “AI supervisors” and “process optimizers”—roles that require deep domain expertise and data literacy. The cost of defining these roles and building training programs to upskill the workforce is a significant but often hidden investment.

The “Human-in-the-Loop” Cost Collapse

Gartner finds that 84% of CIOs and IT leaders do not have a formal process to track AI accuracy. The top approach used today is human review, but as Daryl Plummer, a distinguished vice president at Gartner, points out, “the human-in-the-loop equation is collapsing of itself”. AI can make mistakes faster than humans can catch them, leading to a situation where the cost of verification begins to exceed the savings from automation.
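The point at which verification cost overtakes automation savings can be expressed as a simple break-even calculation. The sketch below is one way to frame it; the per-task saving, the review cost, and the task volume are hypothetical inputs, and the model ignores the cost of errors that slip past review.

```python
def net_monthly_savings(tasks: int, saving_per_task: float,
                        review_rate: float, review_cost_per_task: float) -> float:
    """Automation savings minus the cost of human review, in USD per month."""
    if not 0.0 <= review_rate <= 1.0:
        raise ValueError("review_rate must be between 0 and 1")
    gross = tasks * saving_per_task
    review = tasks * review_rate * review_cost_per_task
    return gross - review


def break_even_review_rate(saving_per_task: float, review_cost_per_task: float) -> float:
    """Share of outputs that can be reviewed before savings are wiped out."""
    if review_cost_per_task <= 0:
        raise ValueError("review_cost_per_task must be positive")
    return min(1.0, saving_per_task / review_cost_per_task)
```

With an assumed saving of $2 per automated task and a review cost of $5 per checked task, the break-even review rate is 40%. Reviewing a quarter of 10,000 monthly tasks leaves $7,500 in net savings, while reviewing half turns the project into a $5,000 monthly loss.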

Case Studies in Economic Friction: Lessons from the Front Lines

Real-world deployments provide critical insights into how hidden costs manifest at scale. The experiences of Klarna and New York City’s “MyCity” chatbot highlight the tension between cost-cutting and quality preservation.

Klarna: The Efficiency vs. Experience Trade-off

Klarna initially reported massive success with AI. Its AI assistant handled two-thirds of customer service chats in its first month, equivalent to the work of 700 full-time agents. The company also reported $10 million in annual savings within its marketing operations, where generative AI reduced the image development cycle from six weeks to seven days and significantly cut reliance on external marketing agencies.

However, by mid-2025, reports indicated a strategic reversal. CEO Sebastian Siemiatkowski admitted that an “overemphasis on cost-cutting led to poorer service” and that the AI solutions “failed to meet the company’s standards for customer experience”. Klarna subsequently resumed hiring human agents, recognizing that while AI can handle routine queries, it cannot yet match the “emotional intelligence” and “complex problem-solving” required for high-stakes financial interactions.

New York City’s “MyCity” Bot: The Regulatory Risk

New York City’s “MyCity” chatbot provided dangerously inaccurate advice to small business owners, at one point effectively instructing them to break the law. This incident illustrates the hidden cost of regulatory and reputational risk. Organizations wanting the speed of AI but not the sprawl, unpredictability, or compliance exposure are finding that “agentic orchestration” is non-negotiable. The cost of failing to implement these controls is not just financial; it is a matter of public trust and legal liability.

Ramp: The Success of Governed Agents

In contrast, the fintech firm Ramp successfully launched an AI finance agent that autonomously audits employee spending. By integrating the agent deeply into its spend management platform and ensuring it read and understood company policies, Ramp achieved drastic reductions in manual auditing hours. PwC reports that such implementations can lead to up to 90% time savings in key processes, allowing staff to reallocate time to higher-value analysis. The key differentiator for Ramp was the “tight audit trails” and “automated controls” built into the system from day one.

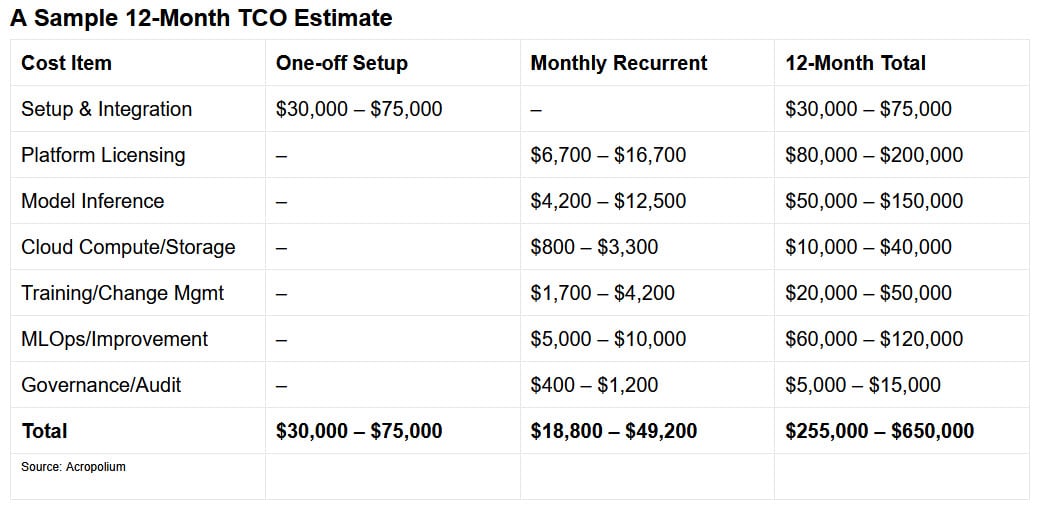

The Total Cost of Ownership (TCO) Model for Agentic AI

A credible TCO model identifies cost contributors across four core dimensions: implementation, platform usage, cloud computing, and ongoing personnel oversight. Without separating these layers, cost planning tends to underestimate the degree of variability introduced once agents move from pilot to production.
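As an illustration of why separating the layers matters, the sketch below models the four dimensions as distinct fields and reports each one's share of the total. The structure is an assumption about how such a model might be laid out, and the pilot and production figures in the usage note are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentTCO:
    """Annual cost in USD, split across the four dimensions named above."""
    implementation: float
    platform_usage: float
    cloud_computing: float
    personnel_oversight: float

    def total(self) -> float:
        return (self.implementation + self.platform_usage
                + self.cloud_computing + self.personnel_oversight)

    def shares(self) -> dict[str, float]:
        """Each dimension's fraction of the total, to show where spend concentrates."""
        total = self.total()
        if total == 0:
            raise ValueError("total cost is zero; shares are undefined")
        return {name: value / total for name, value in vars(self).items()}


# Hypothetical figures: a pilot dominated by implementation cost, and a
# production deployment where usage, compute, and oversight take over.
pilot = AgentTCO(100_000, 50_000, 30_000, 20_000)
production = AgentTCO(100_000, 300_000, 250_000, 350_000)
```

In this invented example, implementation is half of the pilot's cost but only a tenth of the production total, which is the kind of shift a single blended budget line would hide.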

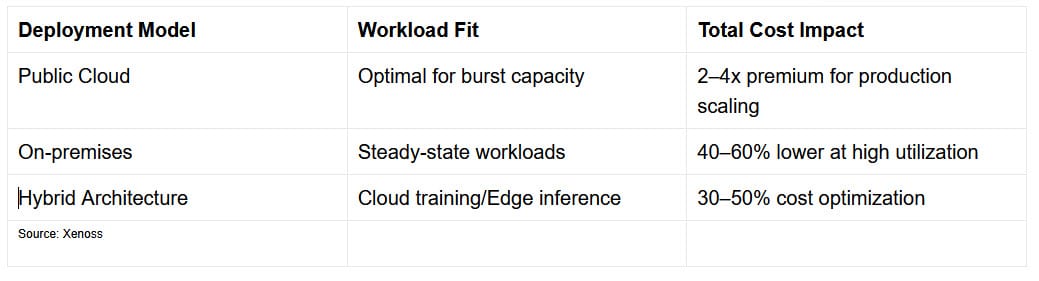

The Impact of Deployment Models

The choice between public cloud, on-premises, and hybrid architectures has a profound impact on long-term TCO.

While cloud-based storage is the most cost-efficient option for most businesses due to pay-as-you-go pricing, on-premises solutions are becoming increasingly attractive for organizations requiring strict data security and control, despite high initial capital investments.

Risk and Security: The Compliance Multiplier

For regulated industries, AI compliance and governance can act as a 40-80% cost multiplier on the initial TCO. Regulations such as the EU AI Act mandate that “high-risk AI systems” include appropriate human-machine interface tools to ensure effective oversight. This requires methods including manual operation, intervention, and real-time monitoring—all of which carry significant labor costs.
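The multiplier can be read in more than one way. The sketch below assumes it means an uplift of 40% to 80% added on top of the initial TCO, which is an interpretation rather than something the underlying research spells out; the base figure in the usage note is hypothetical.

```python
def compliance_adjusted_tco(base_tco: float, low_uplift: float = 0.40,
                            high_uplift: float = 0.80) -> tuple[float, float]:
    """Return the (low, high) TCO after adding the assumed compliance uplift."""
    if base_tco < 0:
        raise ValueError("base_tco must not be negative")
    return (base_tco * (1 + low_uplift), base_tco * (1 + high_uplift))


if __name__ == "__main__":
    low, high = compliance_adjusted_tco(1_000_000)
    print(f"${low:,.0f} to ${high:,.0f}")
```

Under this reading, a $1 million initial TCO becomes $1.4 million to $1.8 million once compliance and governance are included.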

Security Vulnerabilities in Agentic Systems

Agentic AI introduces new classes of risk that do not exist in traditional software or even static LLMs.

- Chained Vulnerabilities: A flaw in one agent cascades across tasks to other agents. For instance, a logic error in a credit data processing agent could misclassify debt as income, leading to an unjustified high score from a downstream loan approval agent.

- Cross-Agent Task Escalation: Malicious agents can exploit trust mechanisms to gain unauthorized privileges. A compromised scheduling agent might falsely escalate a request for patient records as coming from a licensed physician.

- Synthetic-Identity Risk: Adversaries can forge or impersonate agent identities to bypass trust mechanisms, exposing sensitive policyholder data while the impersonation goes undetected.

- Untraceable Data Leakage: Autonomous agents exchanging data without oversight can obscure leaks and evade audits. An agent sharing transaction history might include unneeded personally identifiable information (PII) that goes unnoticed because the exchange isn’t properly logged.

Organizations must invest in “agentic orchestration” as a control layer to apply business policies before an agent takes action and to track agent behavior for complete visibility.
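A control layer of this kind can be pictured as a thin wrapper that checks policies before an agent acts and records every decision. The sketch below is a minimal illustration under that assumption; `Orchestrator`, `Action`, and the `no_pii` policy are hypothetical names, not the API of any particular orchestration product.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Action:
    agent_id: str
    name: str
    payload: dict


# A policy returns None to allow an action, or a reason string to block it.
Policy = Callable[[Action], Optional[str]]


@dataclass
class Orchestrator:
    policies: list[Policy]
    audit_log: list[dict] = field(default_factory=list)

    def execute(self, action: Action, handler: Callable[[Action], object]):
        """Check every policy before acting, and log the decision either way."""
        for policy in self.policies:
            reason = policy(action)
            if reason is not None:
                self.audit_log.append({"agent": action.agent_id, "action": action.name,
                                       "allowed": False, "reason": reason})
                return None
        result = handler(action)
        self.audit_log.append({"agent": action.agent_id, "action": action.name,
                               "allowed": True, "reason": None})
        return result


def no_pii(action: Action) -> Optional[str]:
    """Hypothetical policy: block payloads that carry fields tagged as PII."""
    blocked = {"ssn", "date_of_birth"} & set(action.payload)
    return f"PII fields present: {sorted(blocked)}" if blocked else None
```

Because both allowed and blocked actions are logged, the same structure addresses the untraceable data leakage risk listed above: an exchange that includes unneeded PII is stopped before it happens and leaves an audit record.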

The “Shadow AI” and “ShadowLeak” Threats

Security researchers recently uncovered “ShadowLeak,” a zero-click vulnerability in specialized agents that allowed attackers to exfiltrate data without user interaction. This highlights the danger of “shadow AI”—the use of unapproved AI tools by employees. An October 2024 study found that about half of employees use unapproved AI tools and many would continue to do so even if banned. This creates a massive, unmanaged attack surface that necessitates board-level priority and investment in AI governance platforms.

The Strategic Path Forward: Integrating the Agentic AI Mesh

To break out of the “gen AI paradox,” where investment is high but bottom-line impact is diffuse, McKinsey suggests shifting from “horizontal” copilots to “vertical” function-specific deployments. This requires a new AI architecture paradigm: the agentic AI mesh.

Building the Agentic Business Fabric

The “agentic business fabric” is an intelligent ecosystem where AI agents, data, and employees work together to achieve outcomes. Success in this era requires a coalition of technology, business, finance, and HR leaders to transform how a business operates. Successful organizations will prioritize:

- Defining Outcome-Oriented Models: Moving away from traditional functional departments toward flatter, thinner, and more fluid “small cross-functional squads” that fuse product vision with software delivery.

- Architecting for Reuse: Designing AI models, pipelines, and systems that can be scaled and reused across different verticals to avoid redundant investments.

- Establishing Clear Guardrails: Defining the “levels of agency” allowed in each environment and establishing legal and ethical guidelines on autonomy, liability, and security.

- Prioritizing Data Liquidity: Modernizing the “data core” to build secure, interoperable data platforms that connect previously siloed systems.

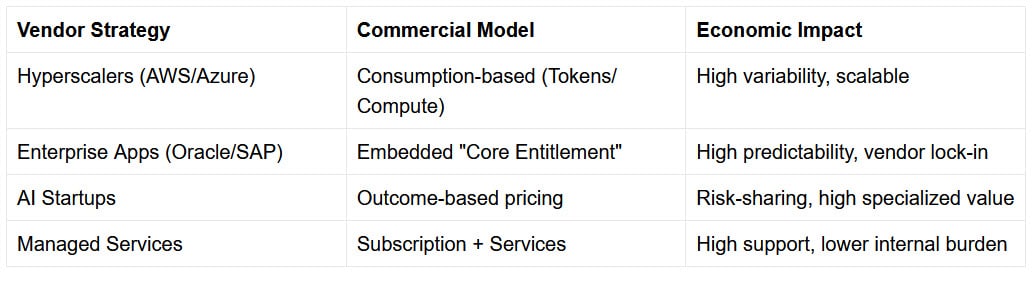

The Vendor Power Play and Commercial Evolution

Vendors are currently using the urgency surrounding AI to end discounts and reset commercial terms, selling premium-priced tools while the real value remains locked behind the customer’s internal process redesign. However, some incumbents are rewriting the rules. Oracle, for example, is positioning itself as the “central nervous system” of the agentic enterprise by embedding agents into its Fusion applications at no additional license fee.

This “predictable total cost of ownership” model may reduce metered experimentation costs, but it increases platform dependency. Leaders must weigh the benefit of “free” embedded agents against the risk of outsourcing business process innovation and narrowing competitive differentiation to what a single vendor’s ecosystem allows.

Agentic AI Requires a New Mindset

Clearly, agentic AI is not a “set it and forget it” technology. It is a transformational technology that demands a fundamental reimagining of the corporate operating model. While the “agentic tax” is real, driven by multiplicative infrastructure costs, a critical talent shortage, and the mandatory expense of hallucination remediation, the potential for competitive advantage remains compelling.

Success hinges on moving beyond incremental gains from copilots and chatbots toward “thinking AI inside,” where AI is embedded into high-value domains and workflows are rewired end-to-end. The organizations that will win the next decade are not necessarily those that experiment the fastest, but those that implement enterprise-grade controls, built-in cost visibility, and robust governance from day one. Radical new technologies always introduce uncertainty and unexpected outcomes, and agentic AI is no different.